Veeam Kasten for Kubernetes Instant Recovery with Veeam Backup & Replication vPower NFS datastore

Purpose

Solution

Update storageClasses

Before using Instant Recovery, storageClasses need to be adjusted so that volumes will not be created in the vPower NFS datastore.

There are two ways to control where the vSphere CSI driver will create volumes:

- Named datastores in the storageClass

- An SPBM policy that excludes the vPower NFS datastore

The steps below for updating the storageClass apply to clusters running TKG version 2.4 or later.

Older versions of TKG do not allow the default storageClass to be modified in any way. In such cases, create a new storageClass with a different name and use it for the PVCs.
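As an illustration of the "new storageClass with a different name" approach, here is a minimal sketch that prints a manifest for an additional storageClass pinned to a named datastore. The function name `new_storageclass_manifest` and the storageClass name `vsphere-no-vpower` are hypothetical; the fields mirror the sample storageClass in the Named Datastore storageClass section, and the datastore URL must be replaced with one from your own vCenter.

```shell
# Sketch only: print a manifest for an additional storageClass.
# $1 = storageClass name (hypothetical, pick your own)
# $2 = datastore URL copied from the vCenter UI
new_storageclass_manifest() {
  cat <<EOF
allowVolumeExpansion: true
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: $1
parameters:
  datastoreurl: "$2"
provisioner: csi.vsphere.vmware.com
reclaimPolicy: Delete
volumeBindingMode: Immediate
EOF
}

# Usage (requires cluster access, so it is left commented out):
#   new_storageclass_manifest vsphere-no-vpower "<datastore URL>" | kubectl apply -f -
```

PVCs would then reference the new name in `spec.storageClassName` instead of relying on the default storageClass.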

Named Datastore storageClass

If Kubernetes volumes should only be allocated in one or more specific vSphere datastores, create storageClasses that name those datastores. This is the simplest way to limit where volumes will be created.

This is a sample storageClass:

    allowVolumeExpansion: true
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: default
    parameters:
      datastoreurl: ds:///vmfs/volumes/vsan:5292cceaadc3cad8-ef4e5a808d92e8f7/
    provisioner: csi.vsphere.vmware.com
    reclaimPolicy: Delete
    volumeBindingMode: Immediate

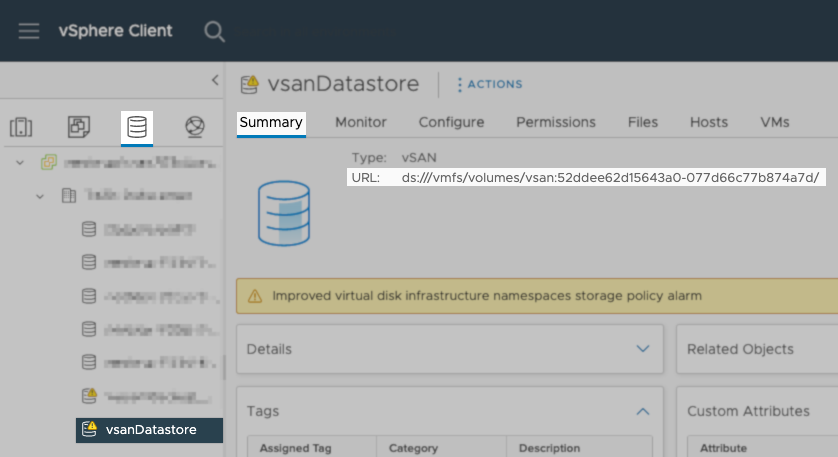

The key change is the addition of the datastoreurl parameter. URL of a datastore can be found by navigating to the datastore within the vCenter UI, as shown in the following screenshot.

SPBM Policy

If it's a complex environment, creating an SPBM policy that does not apply to the vPower NFS Datastore might be a better option.

    allowVolumeExpansion: true
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: default
    parameters:
      storagepolicyname: "vSAN Default Storage Policy" #Optional Parameter
    provisioner: csi.vsphere.vmware.com
    reclaimPolicy: Delete
    volumeBindingMode: Immediate

Applying a new StorageClass

Once the changes to the storageClass are crafted, they will need to be applied. Depending on the configuration, there are different paths, as shown below.

Regular Kubernetes on vSphere installations

On Kubernetes clusters on vSphere that use the regular CSI driver (not "vSphere with Tanzu" Guest Cluster), update storageClasses using kubectl.

In Kubernetes, most fields of a StorageClass, including its parameters, cannot be changed once the object has been created, so it is not possible to patch custom parameters into an existing StorageClass. To update a StorageClass, delete it and recreate it with the desired changes.

- Download the storageClass to a local file using kubectl

- Edit the storageClass file with your favorite editor and add the datastoreurl parameter or storagepolicyname as shown above

- Delete the original storageClass

- Recreate the storageClass
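The steps above can be sketched with standard kubectl commands. This is a minimal sketch, not the exact procedure from Veeam: the function names, the storageClass name `default`, and the file name `default-sc.yaml` are placeholders. One caveat worth checking in your environment: a manifest exported with `-o yaml` carries server-populated metadata such as `resourceVersion`, which usually has to be removed while editing or the create step may be rejected.

```shell
# Step 1: download the storageClass manifest to a local file.
# $1 = storageClass name, $2 = output file
export_storageclass() {
  kubectl get storageclass "$1" -o yaml > "$2"
}

# Step 2 is manual: edit the file, add datastoreurl or storagepolicyname
# under "parameters", and remove server-populated metadata
# (for example resourceVersion and uid).

# Steps 3 and 4: delete the original storageClass, then recreate it
# from the edited file. The create only runs if the delete succeeded.
# $1 = storageClass name, $2 = edited manifest file
replace_storageclass() {
  kubectl delete storageclass "$1" && kubectl create -f "$2"
}

# Usage (requires cluster access, so it is left commented out):
#   export_storageclass default default-sc.yaml
#   ...edit default-sc.yaml...
#   replace_storageclass default default-sc.yaml
```

Existing PVs are not affected by deleting a storageClass; only volumes provisioned after the recreate pick up the new parameters.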

vSphere with Tanzu installations

Veeam Kasten for Kubernetes Instant Recovery is not supported for vSphere with Tanzu installations at this time.

Recovering From Disks in the vPower NFS Datastore

In K10 versions 6.5.9 and later, the Instant Recovery process includes a mandatory FCD migration step.

If disks are created by accident in the vPower NFS datastore, they will need to be removed from the datastore. The easiest solution is to simply delete the volumes in question. It is possible to back up and restore applications that have been placed on the vPower NFS datastore using K10. Before deleting the application namespace, export the backup and remove the snapshots.

Another option is to use vSphere Storage vMotion to move the virtual disks. To do this, first map the Kubernetes PVs to their paths in vSphere, then determine which VMs the volumes are attached to, and finally use Storage vMotion to move those VMs to the correct datastore.
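The Kubernetes side of that mapping can be sketched with kubectl. The function names below are hypothetical. The sketch assumes that, for the vSphere CSI driver, the CSI volume handle of a PV corresponds to the volume ID shown for the container volume in vCenter, and that Kubernetes node names match the node VM names; verify both in your environment before moving anything.

```shell
# List every PV with its claim and CSI volume handle.
# Assumption: the handle matches the volume ID shown in vCenter.
list_pv_handles() {
  kubectl get pv -o custom-columns='PV:.metadata.name,NAMESPACE:.spec.claimRef.namespace,PVC:.spec.claimRef.name,HANDLE:.spec.csi.volumeHandle'
}

# List which node each PV is currently attached to.
# Assumption: the node name identifies the VM to move with Storage vMotion.
list_pv_attachments() {
  kubectl get volumeattachments -o custom-columns='PV:.spec.source.persistentVolumeName,NODE:.spec.nodeName,ATTACHED:.status.attached'
}

# Usage (requires cluster access, so it is left commented out):
#   list_pv_handles
#   list_pv_attachments
```

Joining the two listings on the PV column gives, for each affected volume, the handle to look up in vCenter and the node VM it is attached to.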

Make sure to report any accidental volume leaks into the vPower NFS datastore by opening a case with Veeam Kasten support.
