Read the full series:

Ch.1: Backup Server placement and configuration

This is the second post in the series, and it covers the VMware Backup Proxy. The proxy is truly the workhorse of your backup infrastructure, so this topic should be of interest to many readers!

Best practices for VMware Backup Proxies

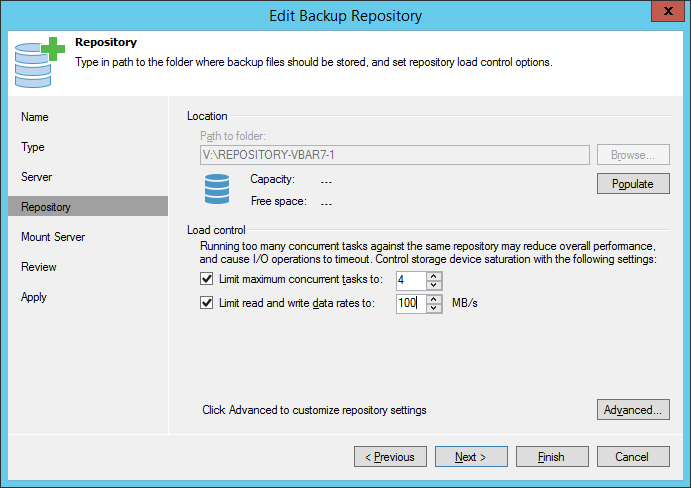

One of the first questions to ask is: "How many proxies should be in use?" The prevailing logic is that the more proxies in place, the better the performance and distribution. But be very careful to ensure that Backup I/O Control is in use. Otherwise, the proxies risk consuming too many I/O cycles on the primary storage systems. Conversely, in smaller environments, the basic controls on the repository can set throttling and connection limits that effectively limit what the proxies can transfer. The figure below shows this basic control in the repository properties (the repository is covered in more detail in the next post in the series):

CPU and RAM usage on a VMware Backup Proxy is largely driven by the compression level set in the backup job. As a rule, keep the default compression level (Optimal) in backup jobs, or be ready to add resources to the proxies. It is also worth watching RAM usage on the target proxy of a replication job.

Let’s talk transport modes!

A good first tip: keep one secondary Hot Add proxy per cluster for restores only. This matters because you may need to run a restore while a backup is in progress. If you sized the number of proxies for backups alone, a restore during that window needs additional resources and connectivity, and you don't want to cancel or delay a backup job to perform it.

A Veeam VMware Backup Proxy offers four transport mode choices:

- Automatic

- Direct storage access

- Virtual appliance (Hot Add)

- Network (NBD)
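As a mental model of the Automatic option, the sketch below assumes the fallback order used by automatic selection: direct storage access first, then virtual appliance (hot add), then network mode. The `ProxyEnvironment` fields and the `select_transport_mode()` function are hypothetical names for illustration, not a Veeam API, and the real selection checks more conditions than shown here.

```python
from dataclasses import dataclass


@dataclass
class ProxyEnvironment:
    """Hypothetical summary of what one proxy can reach for a given VM."""
    has_direct_storage_path: bool   # SAN LUNs zoned/presented, or NFS export reachable
    is_virtual_machine: bool        # hot add requires a virtualized proxy
    host_sees_vm_datastore: bool    # the proxy's ESXi host can access the VM's datastore


def select_transport_mode(env: ProxyEnvironment) -> str:
    """Model of Automatic selection: try the most efficient mode first, then fall back."""
    if env.has_direct_storage_path:
        return "Direct storage access"
    if env.is_virtual_machine and env.host_sees_vm_datastore:
        return "Virtual appliance (Hot Add)"
    # Network mode works in any situation, so it is the final fallback.
    return "Network (NBD)"


if __name__ == "__main__":
    # A virtual proxy whose new LUNs were never presented to it falls back to hot add.
    print(select_transport_mode(ProxyEnvironment(False, True, True)))
```

This is also why forgetting to present new LUNs to the proxies (see the Direct SAN tips) silently pushes jobs into a slower mode rather than failing outright.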

Direct Storage Access transport mode

The transport mode is configured as a property of each proxy, and each job can then be configured to use one or more proxies. Generally speaking, direct storage access is recommended, as it uses Direct SAN Access or Veeam's proprietary Direct NFS Access. Here are a few tips on VMware Direct SAN Access as it relates to proxies:

| The Good | The Bad | The Safe Way |
| --- | --- | --- |
| Fastest backups and full VM restores (except Backup from Storage Snapshots). | Requires a physical backup proxy server for Fibre Channel, which may run against a virtualization initiative. Virtual proxies are supported for iSCSI SAN, however. | Keep Local Administrator rights on backup proxies to yourself. |
| Zero impact on hosts and production networks. Dedicated backup proxies grab the required data directly from the SAN, and backup traffic is isolated to the SAN fabric. | Hard for beginners to set up and to tune for speed. Common misconfigurations involve MPIO setup, misbehaving HBAs and non-optimal RAID caching. | Worried about resignaturing? It almost never happens, and Veeam setup puts preventions in place. Present VMFS LUNs to backup proxies as read-only. |
| Most reliable due to the direct data path. | Direct SAN restore is supported for thick disks only. | Still worried about resignaturing? Make it foolproof by disabling the Disk Management snap-in with Group Policy. |

Here are a few more best practices for Direct SAN Access:

- If you have to choose between more proxies with fewer CPUs and fewer proxies with more CPUs, choose fewer proxies with more CPUs, as this reduces the physical footprint

- Remember to zone and present all new LUNs to the backup proxies as part of the storage provisioning process. Otherwise, the backup job will fail over to another transport mode

- Use manual datastore-to-proxy assignments

- Forgo Direct SAN Access if you can use Backup from Storage Snapshots or Direct NFS

- Use thick disks while doing thin provisioning at the storage level to enable Direct SAN restores

- Update MPIO software (disabling MPIO may increase performance)

- Update firmware and drivers across the board (proxies and hosts!)

- Find the best RAID controller cache setting for your environment

- If you are using iSCSI, check out these Veeam Forum threads on increasing network performance, the VddkPreReadBufferSize DWORD value and Netsh tweaks.

For NFS environments on arrays that aren't supported for Backup from Storage Snapshots, be sure to use Direct NFS Access! It's highly optimized and really a second generation of our NFS client (it was "born" from our NetApp integration in 2014).

Hot Add transport mode

The hot add transport mode (also known as virtual appliance mode) can only be used if the proxy is virtualized. It is easy to set up, and it has these other attributes for Veeam backups:

| The Good | The Bad & Ugly |
| --- | --- |
| Fast, direct storage access for backups, but through the ESXi I/O stack. | Proxies consume resources on the vSphere cluster (including additional vSphere licenses). |
| Provides fast, classic full VM restores. | The hot add process is slow to start because of the configuration changes it requires (one to two minutes per VM), which can add up. |
| Supports any vSphere storage type. | Disables CBT on the backup proxy server. |
| You can use existing Windows VMs as proxies to save licensing. | #1 source of stuck (Consolidation Needed) and hidden snapshots. |
| Helps you be 100% virtual in deployment and is a good choice for all-in-one installations (ROBO). | NFS environments see extended stun on hot remove. |
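To see how the one to two minutes of hot add startup per VM adds up, here is a rough back-of-the-envelope sketch. It assumes VMs are processed in equal "waves" of parallel tasks and that startup time is the only cost counted; `parallel_tasks` is a hypothetical knob standing in for however many concurrent tasks your proxies run, and the function is illustrative, not Veeam logic.

```python
def hot_add_overhead_minutes(vm_count: int, minutes_per_vm: float, parallel_tasks: int = 1) -> float:
    """Rough hot add startup overhead for a job, assuming VMs run in waves of parallel tasks."""
    if vm_count < 0 or minutes_per_vm < 0 or parallel_tasks < 1:
        raise ValueError("counts and times must be non-negative; parallel_tasks must be >= 1")
    # Ceiling division: a partial final wave still costs one full startup period.
    waves = -(-vm_count // parallel_tasks)
    return waves * minutes_per_vm


if __name__ == "__main__":
    # 100 VMs at the upper estimate of 2 minutes each.
    print(hot_add_overhead_minutes(100, 2.0))                    # 200.0 minutes, one at a time
    print(hot_add_overhead_minutes(100, 2.0, parallel_tasks=4))  # 50.0 minutes across 4 tasks
```

Even spread across several tasks, this fixed startup cost is why hot add is a poor fit for jobs with many small, low-change VMs.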

For hot add proxies in clusters that use local storage, a single proxy per host is recommended for the best performance (this ensures hot add is always an option). If a shared storage system is in use, it is recommended to have at least one proxy in the cluster.
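The sizing guidance above, combined with the earlier tip about a secondary restore-only Hot Add proxy per cluster, can be summarized as a minimum count per cluster. This is a minimal sketch under those two rules only; `hot_add_proxies_for_cluster()` is a hypothetical helper, and real sizing also depends on CPU, RAM and job concurrency.

```python
def hot_add_proxies_for_cluster(host_count: int, shared_storage: bool, restore_proxy: bool = True) -> int:
    """Minimum hot add proxies for one cluster under the two rules of thumb in this post."""
    if host_count < 1:
        raise ValueError("a cluster needs at least one host")
    # Local storage: one proxy per host so every VM's disks can be hot added.
    # Shared storage: at least one proxy in the cluster.
    backup_proxies = 1 if shared_storage else host_count
    # Optional secondary proxy reserved for restores during backup windows.
    return backup_proxies + (1 if restore_proxy else 0)


if __name__ == "__main__":
    print(hot_add_proxies_for_cluster(4, shared_storage=False))  # 5: one per host plus a restore proxy
    print(hot_add_proxies_for_cluster(4, shared_storage=True))   # 2: one backup proxy plus a restore proxy
```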

Network transport mode

Network mode is very versatile: it works in any situation. Like the other modes, it has characteristics that need to be taken into consideration. Let's start with the good and the bad for network mode:

| The Good | The Bad & Ugly |
| --- | --- |
| The easiest setup possible; in fact, no setup. It just works! | Leverages the ESXi management interface. |
| Supports installation on both physical and virtual machines. | Has a remote chance of impacting management traffic. |
| Very quick to initialize the data transfer, so it's ideal for backing up VMs with a very low change rate. | The management interface is throttled by vSphere. |
| Enables 100% virtual installation and deployment. | Painfully slow on 1 Gb Ethernet, especially with NFS. |
| Can be quite fast on 10 Gb Ethernet networks! | Normally 10-20 MB/s at best for backups and restores. |
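To put the 10-20 MB/s figure in perspective, this quick calculation estimates how long moving a given amount of data takes at a fixed throughput. The 500 GB size is a hypothetical example, the sketch assumes 1 GB = 1024 MB and a constant rate, and it ignores job startup and snapshot overhead.

```python
def transfer_hours(data_gb: float, throughput_mb_s: float) -> float:
    """Hours needed to move data_gb gigabytes at a constant throughput_mb_s megabytes per second."""
    if throughput_mb_s <= 0:
        raise ValueError("throughput must be positive")
    seconds = data_gb * 1024 / throughput_mb_s
    return seconds / 3600


if __name__ == "__main__":
    # A hypothetical 500 GB full VM restore over network mode.
    print(f"{transfer_hours(500, 20):.1f} h at 20 MB/s")  # 7.1 h at 20 MB/s
    print(f"{transfer_hours(500, 10):.1f} h at 10 MB/s")  # 14.2 h at 10 MB/s
```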

Here are some additional tips for backups and restores with network mode:

- Generally speaking, network mode is recommended with 10 Gb Ethernet.

- It’s perfect for small sites and static data sets with low change rates.

- You can force jobs to specific proxies to avoid unexpected proxies being selected.

- Keep the transport mode logic of ILB (intelligent load balancing) in mind when setting up job proxies: proxies explicitly set to network mode have the lowest priority.

- Following on from the previous tip, hold on to a proxy set to hot add mode, as full VM and full disk restores over network mode (especially over 1 Gb) would be painful.
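The ILB tip above can be pictured as a sort order over candidate proxies. In this hypothetical sketch, the only rule taken from this post is that proxies explicitly set to network mode sort last; breaking ties by fewest active tasks is an assumption for illustration and is not a description of Veeam's actual load balancing algorithm. `Proxy` and `rank_proxies()` are made-up names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Proxy:
    name: str
    transport_mode: str  # "Automatic", "Direct storage access", "Virtual appliance" or "Network"
    active_tasks: int    # hypothetical tie-breaker, not a documented ILB input


def rank_proxies(proxies: List[Proxy]) -> List[Proxy]:
    """Order proxies so explicitly network-mode ones come last (ties: fewest active tasks, assumed)."""
    # False sorts before True, so network-mode proxies land at the end of the list.
    return sorted(proxies, key=lambda p: (p.transport_mode == "Network", p.active_tasks))


if __name__ == "__main__":
    candidates = [
        Proxy("nbd01", "Network", 0),
        Proxy("ha01", "Virtual appliance", 3),
        Proxy("san01", "Direct storage access", 1),
    ]
    print([p.name for p in rank_proxies(candidates)])
```

Note that the idle network-mode proxy still ranks last, which is why forcing jobs to specific proxies can be the more predictable choice.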

That recaps some of the most critical v9 proxy best practices. Stay tuned for more posts in this series! Until then, here are some resources you can use to supplement this blog post: