Hey Veeam fans, today we're back with another blog post! This time, let's look at some basics of Hyper-V storage management. As this is a big topic, I'm going to limit the scope of this post to managing Hyper-V .BIN files and their impact on your environment. So, are you ready? Then let's start with the most basic setup: a Hyper-V Host with some storage to hold its virtual machines, as seen in the illustration below.

When a VM is created, it has some core components that are required for it to start in Hyper-V:

- Hyper-V configuration files

- Hyper-V virtual hard disks

- Hyper-V .BIN file (saved-state memory file)

- Snapshot (checkpoint) files

Note: Although these components can be split across different locations when you create a VM, I don't recommend it. It's a lot easier to manage Hyper-V VMs if all of their files are stored in a single, consistent location.

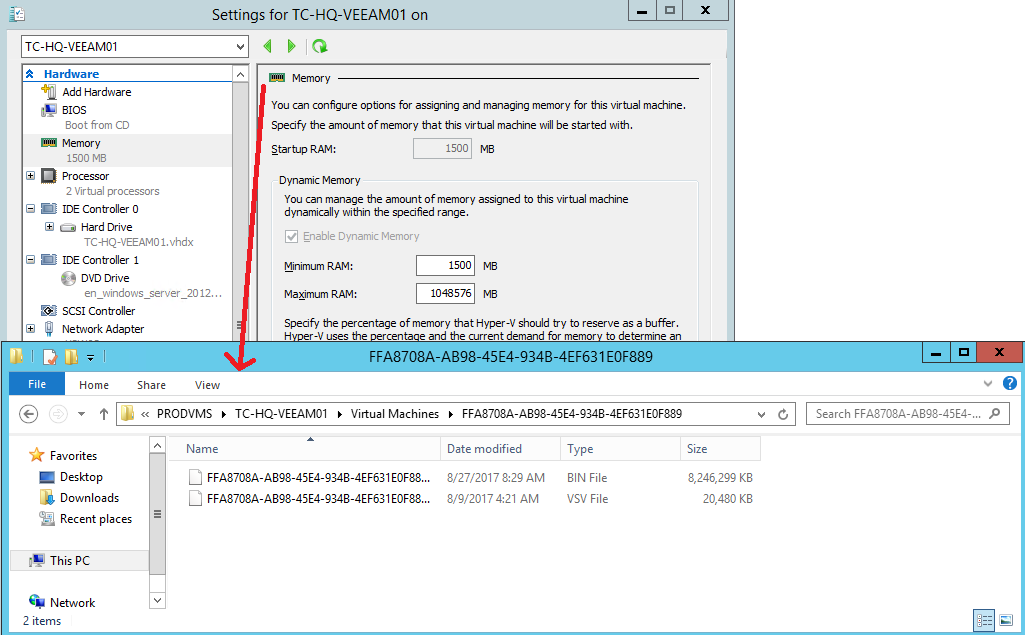

Digging in a bit deeper, the image above shows a VM called TC-HQ-VEEAM01. It's running on a standalone Hyper-V Host and has a single, fixed 99 GB VHDX. There are currently no snapshots (checkpoints) for it, so you might assume this VM will consume approximately 99 GB of space. However, this is only true if the VM is off. Now I know you're asking yourselves: why is there a difference between a VM that is off and one that is running? The answer lies in the Hyper-V .BIN file, which is created when the VM starts and is always the same size as the RAM being consumed by the VM. In our example, this VM uses Hyper-V Dynamic Memory, so it can consume anywhere from 1,500 MB to 1,048,576 MB (1 TB), assuming that much RAM is available on the Host.
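To make the off-versus-running difference concrete, here is a minimal Python sketch of a VM's approximate on-disk footprint. It assumes, as described above, that a running VM has a .BIN file the same size as its memory and that a VM that is off has none; it ignores checkpoints and configuration files. `vm_disk_footprint_gb` is a hypothetical helper, not part of any Hyper-V or Veeam API, and the 4,096 MB of current memory is an assumed value for illustration.

```python
MB_PER_GB = 1024  # assumption: binary units, so 1,048,576 MB == 1,024 GB


def vm_disk_footprint_gb(vhdx_gb: float, memory_mb: float, running: bool) -> float:
    """Approximate disk space used by a VM with no checkpoints.

    Hypothetical helper: adds a .BIN file equal to the VM's memory only
    while the VM is running; a VM that is off is just its VHDX.
    """
    bin_gb = memory_mb / MB_PER_GB if running else 0.0
    return vhdx_gb + bin_gb


if __name__ == "__main__":
    # TC-HQ-VEEAM01: fixed 99 GB VHDX; 4,096 MB of current memory is an assumed value
    print(vm_disk_footprint_gb(99, 4096, running=False))      # 99.0
    print(vm_disk_footprint_gb(99, 4096, running=True))       # 103.0
    # Worst case at the Dynamic Memory maximum of 1,048,576 MB
    print(vm_disk_footprint_gb(99, 1_048_576, running=True))  # 1123.0
```

With Dynamic Memory, the running footprint moves with the VM's memory, which is why the worst case is worth keeping in mind when sizing a volume.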

When planning your Hyper-V environment, it is imperative to account for the space required by the Hyper-V .BIN files and to leave enough reserve on your volumes for them. Here's a scenario we often see in the field. Customer X has a small standalone Hyper-V Server, and it has been running great for the past year. He started with a 600 GB volume to store the 10 VMs on this host. The Hyper-V Host Server itself has a single processor and 128 GB of memory. Each VM uses approximately 10 GB of statically assigned memory. As we learned above, this means we need a minimum of 100+ GB (10 VMs × 10 GB each) of free disk space on the volume just to start the VMs.

When our administrator configured this environment, 50% of the volume (about 300 GB) was still free once the VMs were configured and running. Over time, though, files get copied onto volumes, file servers grow, and we run ourselves out of disk space. This is a common occurrence that I still see Hyper-V administrators dealing with today.
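The sizing rule in Customer X's scenario is simple arithmetic: the free space you need to start every VM is the sum of their memory assignments. The minimal Python sketch below applies it to the numbers above (ten VMs with 10 GB of static memory each, and roughly 300 GB free on the 600 GB volume). The helper names are hypothetical, and the sketch covers only the .BIN reserve itself, not the extra margin implied by "100+ GB".

```python
def bin_reserve_gb(vm_memory_gb: list[float]) -> float:
    """Total .BIN space needed to start every VM: one file per VM, sized to its memory."""
    return sum(vm_memory_gb)


def can_start_all_vms(free_gb_with_vms_off: float, vm_memory_gb: list[float]) -> bool:
    """True if the volume's free space (measured with all VMs off) covers every .BIN file."""
    return free_gb_with_vms_off >= bin_reserve_gb(vm_memory_gb)


if __name__ == "__main__":
    vms = [10.0] * 10  # ten VMs, 10 GB of statically assigned memory each
    print(bin_reserve_gb(vms))            # 100.0
    print(can_start_all_vms(300.0, vms))  # True: 50% of the 600 GB volume is free
    print(can_start_all_vms(90.0, vms))   # False: hypothetical shortfall
```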

Fast forward 18 months, and his volume now looks something like this:

As you can see from the screenshot above, we are down to 3.32 GB of free space on this volume. Oddly enough, though, Hyper-V is working just fine. We haven't had any outages, and the business hasn't complained about any. This is because the VMs on the Hyper-V Host Server haven't been turned off, so their .BIN files already exist and already occupy their share of the volume. When the VMs are turned off, this 100+ GB of disk space is released, and as you can see in the screenshot below, we get quite a different picture.

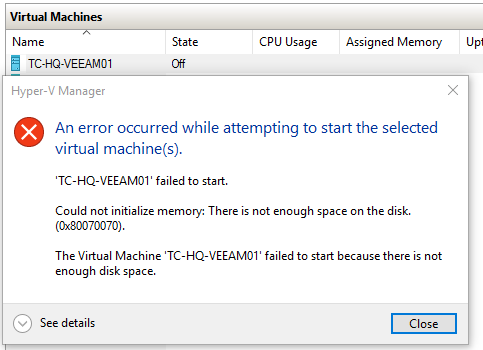

What our Hyper-V administrator is about to do is a very common mistake when managing Hyper-V storage: copying additional files to this volume, such as ISO images or additional VMs with their VHD files. As he does this, he eats into his reserve space, and suddenly he finds himself unable to start his VMs because there isn't enough space left on the volume to recreate the Hyper-V .BIN files.
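One way to avoid this mistake is a quick pre-flight check before copying anything onto the volume. The Python sketch below is a hypothetical helper, not a Hyper-V or Veeam feature. It assumes the 100 GB .BIN reserve from the scenario above and distinguishes two states: with the VMs running, their .BIN files already occupy disk space, so the reported free space already has the reserve taken out; with the VMs off, the reserve still has to come out of the reported free space. The 103.32 GB figure (the 3.32 GB plus the released 100 GB) and the ISO sizes are assumed values for illustration; `shutil.disk_usage` is Python's standard-library call for reading a volume's free space.

```python
import shutil

GIB = 1024 ** 3  # bytes per GB, using binary units (assumption)


def free_gb(path: str) -> float:
    """Free space on the volume that contains `path`, in GB."""
    return shutil.disk_usage(path).free / GIB


def safe_to_copy(free_gb_now: float, copy_gb: float,
                 bin_reserve_gb: float, vms_running: bool) -> bool:
    """Return True if copying `copy_gb` still leaves room for every .BIN file.

    Running VMs already have their .BIN files on disk, so only the copy itself
    must fit. VMs that are off will recreate their .BIN files at start-up,
    so that reserve must remain free after the copy.
    """
    reserve_still_needed = 0.0 if vms_running else bin_reserve_gb
    return free_gb_now - copy_gb >= reserve_still_needed


if __name__ == "__main__":
    # VMs off: ~103.32 GB free looks roomy, but a hypothetical 5 GB ISO breaks the reserve.
    print(safe_to_copy(103.32, 5.0, 100.0, vms_running=False))  # False
    print(safe_to_copy(103.32, 2.0, 100.0, vms_running=False))  # True
    # VMs running: the .BIN files already exist, so only 3.32 GB is really free.
    print(safe_to_copy(3.32, 5.0, 100.0, vms_running=True))     # False
```

On the host, you could feed it a live reading such as `free_gb("D:\\")`, where `D:\` is a placeholder for whichever volume holds your VMs; just make sure `vms_running` matches the VMs' actual state when the free space was measured.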

Now that you have finished reading this short post on Hyper-V storage management, I hope you'll remember to leave enough reserve space on your Hyper-V Host Servers so you never run into this issue.

Thanks, and happy learning.

See Also: