Regardless of what hypervisor you use (I have experience in both Hyper-V and vSphere technologies), I see one common administrative practice: Stationary designs. This feels natural: Why change what you know works?

Now, don’t go out and Bing search “stationary designs,” as you will quickly see some very nice and modern images for paper and related products. What I mean by stationary designs is that the core practices and principles of the virtualization environment haven’t really changed. I am usually reluctant to change my storage volume preferences (such as a file system) and generally reluctant to change how I deploy virtual machine (VM) disks (in particular, fixed size or dynamic size).

There is one set of technologies I’d like to highlight in this blog post that I think can make a big difference in how you look at Hyper-V infrastructures in many areas:

- Designing a cluster (or host)

- Running and administering VMs

- Deploying VMs

ReFS vs NTFS

ReFS can bring many changes. ReFS, or Resilient File System, is a storage technology introduced in Windows Server 2012 and improved with new features ever since. I’ll agree it may take a lot of convincing to move away from NTFS for hosting Hyper-V VMs, but if you haven’t read this TechNet blog, please do! It is the gateway to doing Hyper-V the right way with Windows Server 2016 today.

When ReFS is used for Hyper-V, two immediate speed and efficiency benefits can be had. The first is that VM checkpoints are now done via a metadata update, so they are incredibly fast on disk. Within Hyper-V 2016, this is called Production Checkpoints. The second is that ReFS can be put to work for you when provisioning fixed size VHD or VHDX files. One of the best Hyper-V bloggers and fellow Microsoft MVP, Aidan Finn, has a post on an early preview that outlines how provisioning a 500 GB VHDX file on NTFS took 2441 seconds (over 40 minutes), while the same size VHDX on ReFS took 13 seconds. This feature within Hyper-V 2016 is known as Instant Fixed Disk Creation. Both benefits are brand new in Windows Server 2016, and they are incredible!
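To put those two timings in perspective, here is a quick back-of-the-envelope sketch in Python. The only inputs are the two numbers quoted above; the function names are my own and purely illustrative.

```python
# Timings quoted in the post for provisioning a 500 GB fixed size VHDX.
NTFS_SECONDS = 2441
REFS_SECONDS = 13


def as_minutes(seconds: float) -> float:
    """Convert a duration in seconds to minutes."""
    return seconds / 60


def speedup(slow_seconds: float, fast_seconds: float) -> float:
    """Return how many times shorter the fast duration is than the slow one."""
    return slow_seconds / fast_seconds


if __name__ == "__main__":
    print(f"NTFS: {as_minutes(NTFS_SECONDS):.1f} minutes")
    print(f"ReFS: {REFS_SECONDS} seconds")
    print(f"Speedup: roughly {speedup(NTFS_SECONDS, REFS_SECONDS):.0f}x")
```

That works out to a little under 41 minutes on NTFS versus 13 seconds on ReFS, or roughly 188 times faster for this one early-preview measurement. Your own numbers will depend on your storage.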

These two benefits will change how you design your Hyper-V hosts and clusters, as well as how you run, administer and deploy VMs. Let’s take a look at how this will positively change the process:

Designing a cluster

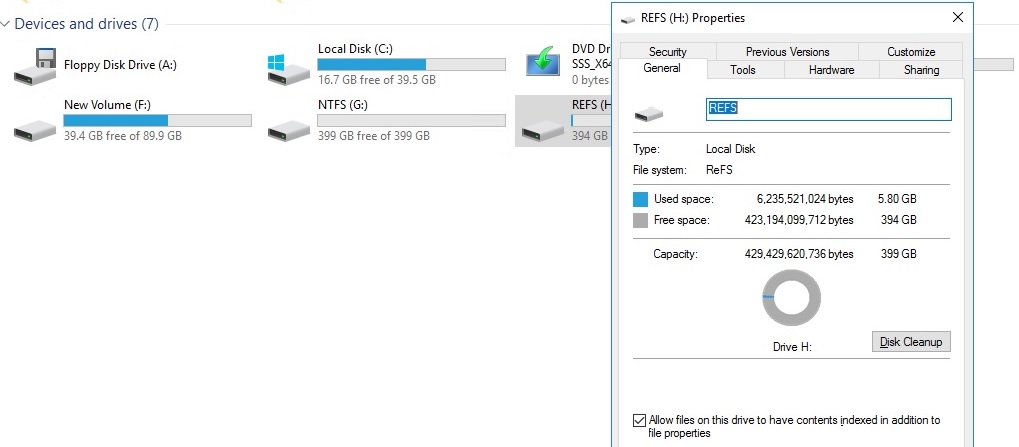

A part of the storage provisioning process is the actual formatting of the volume with ReFS. This is quite simple, but note that ReFS is NOT (yet) the default option when you format a volume: NTFS still is. The ReFS volume sits alongside other volumes and isn’t very distinguishable until you open its properties, so it is a good idea to name the volume in a self-documenting manner. The figure above shows a volume named “REFS” alongside an “NTFS” volume. A more descriptive name such as “REFS VM Storage” would work as well.

Running and administering VMs

This area of Hyper-V benefits from ReFS because checkpoints can do their storage operations on disk by only updating metadata, which is known as Production Checkpoints. Moving blocks around from a checkpoint on a running VM creates significant I/O, and the metadata updates provided by ReFS make this much more efficient. Until now, Microsoft never recommended using checkpoints on production VMs. This is a completely different story in Windows Server 2016: you can now comfortably leverage the Production Checkpoint feature at any time. Backup operations are improved as well. You can also call Hyper-V checkpoints from PowerShell if you need to take them more often or if you just like to automate common tasks.
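If you drive your automation from a scripting language rather than directly from PowerShell, the same idea can be sketched in Python. This is a minimal sketch, assuming a Windows host with the Hyper-V PowerShell module installed. It shells out to the `Checkpoint-VM` cmdlet. The helper names, the VM name and the timestamped label format are my own illustrative assumptions.

```python
import subprocess
from datetime import datetime
from typing import List


def build_checkpoint_command(vm_name: str, label: str) -> List[str]:
    """Build a powershell.exe call that runs the Hyper-V Checkpoint-VM cmdlet."""
    # Inside a single-quoted PowerShell string, a quote is escaped by doubling it.
    safe_vm = vm_name.replace("'", "''")
    safe_label = label.replace("'", "''")
    script = f"Checkpoint-VM -Name '{safe_vm}' -SnapshotName '{safe_label}'"
    return ["powershell.exe", "-NoProfile", "-Command", script]


def checkpoint_vm(vm_name: str) -> None:
    """Take a checkpoint of vm_name with a timestamped label (hypothetical naming scheme)."""
    label = f"{vm_name}-{datetime.now():%Y%m%d-%H%M%S}"
    # check=True raises CalledProcessError if PowerShell reports a failure.
    subprocess.run(build_checkpoint_command(vm_name, label), check=True)


if __name__ == "__main__":
    # "web01" is a placeholder VM name, not one taken from this post.
    print(build_checkpoint_command("web01", "before-patching"))
```

Splitting the command construction from the execution means you can inspect or log exactly what will run before pointing it at a production host.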

I am also confident that, after you read the next section, how you monitor VM storage will change with ReFS, and with it the way you administer storage consumption on ReFS volumes.

Deploying VMs

Now that fixed size VHDX drives can be created nearly instantly, things change substantially. Personally, I would always take a dynamic size VHDX drive because it was quicker and easier. I know that fixed size is better for performance, but I get impatient easily, and the dynamic size satisfied the lazy administrator in me who wanted to get things deployed quickly. But what if we don’t have to wait for fixed size disks any longer? ReFS gives us this opportunity.

This changes more than just how quickly a VM gets deployed, and it comes back to the storage side of administering a VM. When dynamic size is used, we have to worry about two types of oversubscription: on the whole physical storage volume and also within the guest VM. Monitoring oversubscription is one thing, but dealing with a volume that has actually filled up is another.

There is a problem when things aren’t tended to properly. Imagine the following:

- A storage volume with running production Hyper-V VMs

- The guest VHDX drive(s) are set to dynamically grow

- The CSV / SMB3 storage volume fills up because it’s been oversubscribed

You see the issue here: Dynamic drives can fill up eventually, and if there are many VMs on this volume, the impact can be significant. Leveraging the ReFS capability to fully allocate the storage within the guest drive can help avoid that potential problem. We have enough to worry about on the guest operating system; let’s keep the host in good shape by using fixed size, fully allocated Hyper-V VMs.
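To make the oversubscription risk concrete, here is a small Python sketch of the arithmetic. It is not tied to any Hyper-V API. The `DynamicDisk` type, the helper functions and the sample sizes are all hypothetical and only illustrate how the maximum sizes promised to guests compare with what the volume can really hold.

```python
from dataclasses import dataclass
from typing import List

GIB = 1024 ** 3


@dataclass
class DynamicDisk:
    name: str
    max_size_bytes: int      # size the guest believes it has
    current_size_bytes: int  # space the VHDX file occupies on the volume today


def oversubscription_ratio(disks: List[DynamicDisk], volume_capacity_bytes: int) -> float:
    """Ratio of space promised to guests versus the volume's real capacity."""
    promised = sum(d.max_size_bytes for d in disks)
    return promised / volume_capacity_bytes


def free_bytes(disks: List[DynamicDisk], volume_capacity_bytes: int) -> int:
    """Space left on the volume given what the dynamic disks occupy right now."""
    used = sum(d.current_size_bytes for d in disks)
    return volume_capacity_bytes - used


if __name__ == "__main__":
    # Made-up example: three 500 GiB dynamic disks on a 1 TiB volume.
    disks = [
        DynamicDisk("vm1.vhdx", 500 * GIB, 120 * GIB),
        DynamicDisk("vm2.vhdx", 500 * GIB, 300 * GIB),
        DynamicDisk("vm3.vhdx", 500 * GIB, 90 * GIB),
    ]
    capacity = 1024 * GIB
    ratio = oversubscription_ratio(disks, capacity)
    print(f"Oversubscription ratio: {ratio:.2f}")
    print(f"Free today: {free_bytes(disks, capacity) // GIB} GiB")
```

A ratio above 1.0 means the guests could collectively ask for more space than the volume has. With fixed size disks, the current size always equals the maximum size, so a disk that was created successfully can never push the volume past its capacity later on.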

Are you ready for Resilient File System (ReFS)?

Are you convinced that Resilient File System (ReFS) can change a lot? I believe it can. Have you converted any of your volumes to ReFS? Share your comments below.
